Warp3D Nova: 3D At Last - Part 1

Yes! It's finally time to draw in 3D. Well, almost: you won't get far without a basic understanding of 3D geometry, so there's still some theory to get through. Feel free to skip this if you already know what model, view and projection matrices are. Otherwise, pay close attention.

I'm going to focus on core concepts rather than the underlying mathematics. Going into the mathematics would take too long and obscure the key concepts. That said, a good understanding of matrix algebra would be very helpful. Check out Bradley Kjell's tutorial to learn about matrix/vector mathematics for graphics:

http://chortle.ccsu.edu/vectorlessons/vectorindex.html

From 3D Model to 2D Image

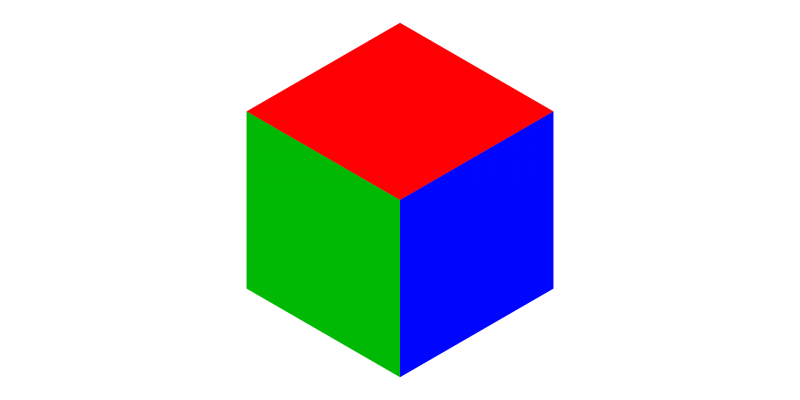

To render a convincing 3D scene, objects must be projected onto a 2D screen via a simulated camera. Each object has its own position and orientation (a.k.a., pose) in the virtual world, as does the camera. So, a model's triangles/surfaces must be transformed through multiple spaces before they finally become pixels on a screen. The transformations are illustrated in the figure above.

The Model Matrix

As I said earlier, each object has a position and orientation (pose) in 3D space. The object's shape is built out of vertices and triangles,** and our 3D renderer needs to project those vertices onto the screen. The first step is the model matrix.

The model matrix stores the object's pose. Strictly speaking, it stores a 3D transformation: multiplying vertex positions by the model matrix transforms them from object space to world coordinates:

$$p_w = M p_o$$

So vertex point $p_o$ is multiplied by model matrix $M$, which transforms that point to its location in world space, $p_w$. This is exactly what our renderer needs to do. In other words, the model matrix is the object's pose stored in a format that the renderer can use directly.
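To make the model transform concrete, here is a minimal C sketch. It assumes an OpenGL-style column-major 4x4 matrix stored as a plain float array; `model_matrix()` and `mat4_mul_vec4()` are hypothetical helpers for illustration, not part of Warp3D Nova's API. The example pose rotates the object about its own z-axis and then moves it to a position in the world.

```c
/* Assumed layout: OpenGL-style column-major 4x4 matrix,
 * element (row r, column c) stored at m[c*4 + r]. */

/* Hypothetical helper: model matrix M = T * R for an object rotated about its
 * z-axis (c = cos(angle), s = sin(angle)) and then placed at (tx, ty, tz). */
static void model_matrix(float c, float s, float tx, float ty, float tz, float m[16])
{
    const float M[16] = {
         c,    s,    0.0f, 0.0f,   /* column 0 */
        -s,    c,    0.0f, 0.0f,   /* column 1 */
         0.0f, 0.0f, 1.0f, 0.0f,   /* column 2 */
         tx,   ty,   tz,   1.0f    /* column 3: translation (position) */
    };
    for (int i = 0; i < 16; ++i)
        m[i] = M[i];
}

/* out = m * v, treating v as a column vector. */
static void mat4_mul_vec4(const float m[16], const float v[4], float out[4])
{
    for (int r = 0; r < 4; ++r)
        out[r] = m[r] * v[0] + m[4 + r] * v[1] + m[8 + r] * v[2] + m[12 + r] * v[3];
}
```

For example, rotating by 90 degrees (c = 0, s = 1) and moving the object to x = 10 takes the object-space point (1, 0, 0) to (10, 1, 0) in world space. Positions carry a fourth component w = 1; that's what lets the translation column take effect.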

** NOTE: 3D models don't have to be built using vertices and triangles, but this is the most common method for graphics, especially real-time graphics such as games.

The View Matrix

Having vertices in world space is nice, but we really need to know where they are relative to the camera. So they need to be transformed again. This is where the view matrix comes in.

The view matrix transforms vertices from world to camera coordinates. Like a model matrix, it's derived from a pose, in this case the camera's. However, it's the inverse of the camera's model matrix, because it transforms points from world coordinates, whereas model matrices transform points to world coordinates.

Multiplying by both the model and view matrices transforms vertices from model coordinates into camera coordinates:

$$p_v = V M p_o$$

As you can probably guess, $V$ is the view matrix and $p_v$ is point $p_o$ relative to the camera's origin (i.e., in camera coordinates).
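Because the view matrix is the inverse of the camera's own pose matrix, it can be computed from that pose. The C sketch below assumes the camera's pose is a rigid transform (rotation plus translation, no scaling) stored column-major as in OpenGL. For such a matrix the inverse is just the transposed rotation combined with the negated, rotated translation, which is cheaper than a general 4x4 inverse. `view_from_camera_pose()` is a hypothetical helper, not a Warp3D Nova function.

```c
/* Assumed layout: column-major 4x4, element (row r, column c) at m[c*4 + r].
 * Hypothetical helper: invert a rigid camera pose [R | t] into a view
 * matrix [R^T | -R^T t]. Only valid when the pose has no scaling. */
static void view_from_camera_pose(const float cam[16], float view[16])
{
    /* Transpose the 3x3 rotation part: view(r, c) = cam(c, r). */
    for (int c = 0; c < 3; ++c)
        for (int r = 0; r < 3; ++r)
            view[c * 4 + r] = cam[r * 4 + c];

    /* New translation = -(R^T * t), where t is the camera's world position. */
    for (int r = 0; r < 3; ++r)
        view[12 + r] = -(view[0 * 4 + r] * cam[12] +
                         view[1 * 4 + r] * cam[13] +
                         view[2 * 4 + r] * cam[14]);

    /* Bottom row stays (0, 0, 0, 1). */
    view[3] = view[7] = view[11] = 0.0f;
    view[15] = 1.0f;
}
```

For a camera at (1, 2, 3) rotated 90 degrees about z, the resulting view matrix maps the camera's own world position back to the origin of camera space, as expected.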

Projection Matrix

The projection matrix is our virtual camera's lens. It projects vertices into clip space, from which they are then converted into Normalized Device Coordinates (NDC). This is a two-step process. First $p_v$ is multiplied by the projection matrix ($P$):

$$p_c = P p_v = P V M p_o$$

The result is a vector with the following values:

- x - the horizontal position

- y - the vertical position

- z - the depth value

- w - the distance to the point along the camera's z-axis (for a perspective projection)

Next, the 3D to 2D projection is performed by dividing x, y and z by w. This step is known as the perspective divide.
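The two steps (multiply by P, then divide by w) can be sketched in C as follows. This assumes OpenGL conventions: a symmetric perspective matrix in column-major order, with the camera looking down its -z axis so that w ends up holding the point's distance in front of the camera. `perspective()` and `project_point()` are hypothetical helpers, and the `focal` parameter stands for 1/tan(fovy/2), precomputed by the caller.

```c
/* Assumed layout: column-major 4x4, element (row r, column c) at m[c*4 + r].
 * Hypothetical helper: OpenGL-style symmetric perspective projection.
 * focal = 1 / tan(fovy / 2); zNear and zFar are positive plane distances. */
static void perspective(float focal, float aspect, float zNear, float zFar, float P[16])
{
    for (int i = 0; i < 16; ++i)
        P[i] = 0.0f;
    P[0]  = focal / aspect;
    P[5]  = focal;
    P[10] = (zFar + zNear) / (zNear - zFar);
    P[11] = -1.0f;                              /* w_clip = -z_eye */
    P[14] = 2.0f * zFar * zNear / (zNear - zFar);
}

/* Step 1: clip coordinates pc = P * pv.
 * Step 2: perspective divide, ndc = pc.xyz / pc.w (assumes pc.w != 0). */
static void project_point(const float P[16], const float pv[4], float ndc[3])
{
    float pc[4];
    for (int r = 0; r < 4; ++r)
        pc[r] = P[r] * pv[0] + P[4 + r] * pv[1] + P[8 + r] * pv[2] + P[12 + r] * pv[3];
    ndc[0] = pc[0] / pc[3];
    ndc[1] = pc[1] / pc[3];
    ndc[2] = pc[2] / pc[3];
}
```

With zNear = 1 and zFar = 3, a point on the near plane ends up at z = -1 and one on the far plane at z = +1. A point halfway between them (eye-space z = -2), however, lands at z = 0.5 rather than 0, which is one reason the depth value z and the distance w aren't the same thing.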

Why clip space? Well, clip space makes it easy for the GPU to discard any points that are not visible. Warp3D Nova uses the same clip space as OpenGL. After the divide by w, all visible points lie within a cube centered on the origin (i.e., x, y, and z values lie within the range [-1,1]). Triangles completely outside this clip cube can't be seen by the camera, and are discarded; triangles that straddle its boundary are clipped to it. There's no point in wasting any more processing power on something that the camera can't see.
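The "completely outside" check can be illustrated with the classic outcode technique borrowed from Cohen-Sutherland line clipping. This is a minimal C sketch of the idea, not a description of how any particular GPU or Warp3D Nova implements it. It works on clip coordinates before the divide, where a point is inside when -w <= x, y, z <= w; the function names are hypothetical.

```c
#include <stdbool.h>

/* One bit per clip plane that the clip-space point (x, y, z, w) lies outside of. */
static unsigned outcode(const float p[4])
{
    unsigned code = 0;
    if (p[0] < -p[3]) code |= 1u;   /* left   */
    if (p[0] >  p[3]) code |= 2u;   /* right  */
    if (p[1] < -p[3]) code |= 4u;   /* bottom */
    if (p[1] >  p[3]) code |= 8u;   /* top    */
    if (p[2] < -p[3]) code |= 16u;  /* near   */
    if (p[2] >  p[3]) code |= 32u;  /* far    */
    return code;
}

/* A triangle can be discarded outright when all three vertices are outside
 * the SAME plane. Anything else is kept (and may still need clipping). */
static bool triangle_trivially_rejected(const float a[4], const float b[4], const float c[4])
{
    return (outcode(a) & outcode(b) & outcode(c)) != 0u;
}
```

This test is conservative: a triangle whose vertices lie outside different planes isn't rejected, even if it turns out to be invisible. Those cases are left to the actual clipping stage.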

The Special Z and the Depth Buffer

It may seem strange to have both a depth value (z) and a z-axis distance (w). Aren't they the same? Not exactly. The depth value is used to determine which surface is closest to the camera. Objects that are closer to the camera must always appear in front of those that are farther away.

Depth testing is performed using a depth buffer. This buffer records the depth (z) of the closest surface drawn so far at every pixel. When a triangle is rendered, the GPU checks whether it's closer to the camera than any previously rendered surface. The GPU then only draws the triangle to pixels where it's the closest to the camera.
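Here's a minimal software sketch of that depth test in C. It assumes a tiny, hypothetical 4x4-pixel frame and OpenGL's default "less than" comparison, where a fragment passes if its depth is smaller than the value already stored. On real hardware the GPU does all of this for you; the buffer names and sizes are made up for illustration.

```c
#include <stdbool.h>

#define WIDTH  4
#define HEIGHT 4

/* Hypothetical software buffers: one depth value and one color per pixel. */
static float    depth_buffer[WIDTH * HEIGHT];
static unsigned color_buffer[WIDTH * HEIGHT];

/* Clear depth to the farthest value before drawing a frame. */
static void clear_depth(void)
{
    for (int i = 0; i < WIDTH * HEIGHT; ++i)
        depth_buffer[i] = 1.0f;
}

/* "Less than" depth test: write the fragment only if it is closer than
 * whatever was drawn at this pixel before. Returns true if it was written. */
static bool depth_test_and_write(int x, int y, float depth, unsigned color)
{
    int i = y * WIDTH + x;
    if (depth < depth_buffer[i]) {
        depth_buffer[i] = depth;
        color_buffer[i] = color;
        return true;
    }
    return false;
}
```

Because each pixel keeps only the closest surface seen so far, opaque triangles can be drawn in any order and the nearest one still ends up on screen.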

A separate depth value is used because the projection calculation must be done at high precision (using w), whereas the depth buffer may have limited precision (e.g., it might be 16-bit rather than 32-bit). Normalizing the depth value to [-1,1] (the near clip plane maps to -1 and the far clip plane to +1) ensures that it can be scaled to fit the depth buffer regardless of its precision.
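That scaling step can be sketched in C, assuming OpenGL's default depth range: [-1,1] is first mapped to [0,1] and then to the integer range of an n-bit depth buffer. `depth_to_fixed()` is a hypothetical helper; real GPUs perform this conversion in hardware.

```c
#include <stdint.h>

/* Hypothetical helper: convert a normalized device depth in [-1, 1] into an
 * unsigned fixed-point depth-buffer value with 'bits' bits (1..32).
 * Assumes OpenGL's default depth range: -1 (near) -> 0, +1 (far) -> max. */
static uint32_t depth_to_fixed(float z_ndc, unsigned bits)
{
    /* Clamp, in case rounding pushed the value just outside the clip range. */
    if (z_ndc < -1.0f) z_ndc = -1.0f;
    if (z_ndc >  1.0f) z_ndc =  1.0f;

    double z01 = ((double)z_ndc + 1.0) * 0.5;              /* [-1,1] -> [0,1] */
    double max = (double)((UINT64_C(1) << bits) - 1u);     /* 65535 for 16 bits */
    return (uint32_t)(z01 * max + 0.5);                    /* round to nearest */
}
```

The same normalized depth simply maps to a different integer for a 16-bit buffer (0 to 65535) than for a 24-bit one (0 to 16777215), which is exactly why normalizing first makes the buffer's precision irrelevant to the earlier stages.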

The MVP Matrix

The model, view and projection matrices are multiplied together beforehand, creating the combined MVP (model-view-projection) matrix:

$$M_{MVP} = P V M$$

That way vertices only need to be multiplied by one matrix instead of three, cutting the per-vertex matrix work to roughly a third (the matrices themselves only need to be multiplied together once, not once per vertex). Notice how the matrix order is from right to left. This is because the position vector is to the right, and so is multiplied by the right-most matrix (M) first.
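Below is a C sketch of building the MVP matrix once and then using it per vertex, assuming the same column-major layout as OpenGL. `mat4_mul()`, `mat4_mul_vec4()` and `build_mvp()` are hypothetical helpers. Transforming a vertex with the combined matrix gives the same result as applying M, V and P one after another.

```c
/* Assumed layout: column-major 4x4, element (row r, column c) at m[c*4 + r]. */

/* out = a * b. 'out' must not alias 'a' or 'b'. */
static void mat4_mul(const float a[16], const float b[16], float out[16])
{
    for (int c = 0; c < 4; ++c)
        for (int r = 0; r < 4; ++r) {
            float sum = 0.0f;
            for (int k = 0; k < 4; ++k)
                sum += a[k * 4 + r] * b[c * 4 + k];   /* a(r,k) * b(k,c) */
            out[c * 4 + r] = sum;
        }
}

/* out = m * v, treating v as a column vector. */
static void mat4_mul_vec4(const float m[16], const float v[4], float out[4])
{
    for (int r = 0; r < 4; ++r)
        out[r] = m[r] * v[0] + m[4 + r] * v[1] + m[8 + r] * v[2] + m[12 + r] * v[3];
}

/* MVP = P * V * M, built once (e.g. per object per frame).
 * Right-to-left: M is applied to the vertex first, then V, then P. */
static void build_mvp(const float P[16], const float V[16], const float M[16], float mvp[16])
{
    float vm[16];
    mat4_mul(V, M, vm);     /* V * M */
    mat4_mul(P, vm, mvp);   /* P * (V * M) */
}
```

Swapping the order (e.g. computing M * V * P) would apply the projection first and give a different, wrong result, since matrix multiplication isn't commutative.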

The Viewport Transform

After points have been clipped, they're transformed to screen/pixel coordinates. You won't need to calculate this transformation yourself. Warp3D Nova's SetViewport() (or glViewport() in OpenGL) calculates this for you.

This is the final transformation. The 3D object has been projected onto the 2D image, and can now be drawn to screen. At this point the triangles are converted to pixels, and the fragment shader is run for each pixel.
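Although SetViewport() handles it for you, the viewport transform itself is short. The C sketch below uses the formulas defined for OpenGL's glViewport() and glDepthRange(); the `Viewport` struct and `ndc_to_window()` are hypothetical, and Warp3D Nova's exact parameters and conventions may differ.

```c
/* Hypothetical viewport description, following OpenGL's conventions:
 * (x0, y0) is the lower-left corner in pixels, width/height the size,
 * and [depth_near, depth_far] the depth range (OpenGL's default is [0, 1]). */
typedef struct {
    float x0, y0, width, height;
    float depth_near, depth_far;
} Viewport;

/* Map normalized device coordinates in [-1, 1] to window (pixel) coordinates. */
static void ndc_to_window(const Viewport *vp, const float ndc[3], float win[3])
{
    win[0] = (ndc[0] + 1.0f) * 0.5f * vp->width  + vp->x0;
    win[1] = (ndc[1] + 1.0f) * 0.5f * vp->height + vp->y0;
    win[2] = (ndc[2] + 1.0f) * 0.5f * (vp->depth_far - vp->depth_near) + vp->depth_near;
}
```

For a 640x480 viewport, the center of the clip cube (0, 0, 0) lands at pixel (320, 240) with a window depth of 0.5, while the corner (-1, -1, -1) lands at (0, 0) with depth 0.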

Conclusion

3D rendering involves several 3D transformations. The model matrix represents the object's pose; it transforms vertices into world coordinates. Next, the view matrix transforms the vertices into the camera's view space. From there, the projection matrix projects them into clip space, where anything that isn't visible is clipped away. Finally, the vertices are transformed into screen/pixel coordinates. The 3D to 2D projection is complete, and the individual triangles are drawn, generating the final image.

Next time I'll show you how to create and use the matrices with Warp3D Nova. Then, we'll finally draw something in 3D. I promise.
